Professional Projects

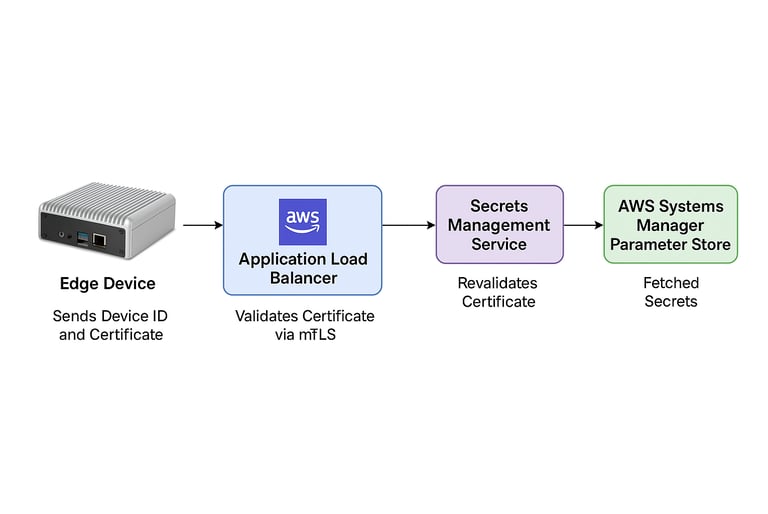

Secrets Management System

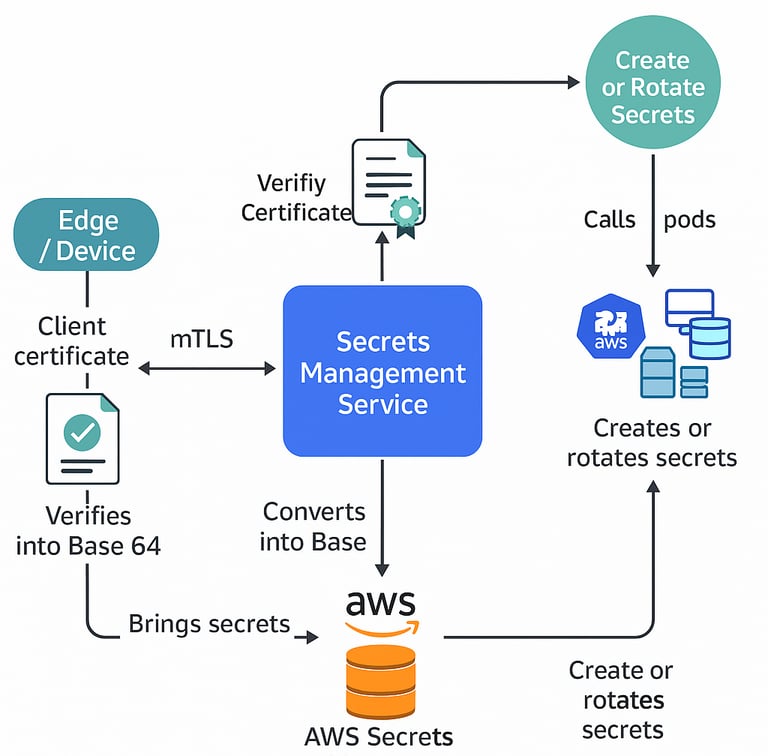

The Secrets Management System is a security-focused platform developed to manage and protect sensitive credentials (API keys, DB passwords, certificates, etc.) across multiple environments. Previously, secrets were stored in plain-text files, which exposed systems to a high risk of breach. Our solution addresses this by introducing encrypted storage, centralized management, and secure distribution.

Key Features:

Centralized Secrets Repository: All secrets are stored encrypted (KMS) in AWS Systems Manager Parameter Store, with automatic versioning and rotation policies.

mTLS (Mutual TLS) Security: Edge devices and services communicate securely with the backend through AWS ALB + mTLS validation, ensuring both client and server identity verification.

Microservices Architecture: Built using .NET 8 (Clean Architecture + Autofac + Serilog) and deployed as independent microservices in Kubernetes for scalability and reliability.

Kubernetes Integration: Secrets are mounted into pods as Kubernetes Secret volumes, with non-sensitive settings kept in ConfigMaps, ensuring least-privilege access and dynamic updates without service restarts.

Audit & Logging: Implemented centralized logging with Serilog and structured tracing for full visibility of secret access patterns.

Rotation & Lifecycle Management: Secrets are rotated periodically to reduce exposure, with automated retrieval and distribution to dependent services.
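One way a service can pick up a rotated secret without restarting is to re-read the mounted file whenever it changes. The following is a minimal sketch of that idea, not the project's actual implementation: the class name `MountedSecret` and the file path in the usage note are hypothetical, and the sketch assumes the secret is exposed to the service as a regular file.

```python
from pathlib import Path
from typing import Optional, Tuple


class MountedSecret:
    """Reads a secret from a file and re-reads it when the file changes."""

    def __init__(self, path: str) -> None:
        self._path = Path(path)
        # (mtime_ns, size) of the version that was last loaded.
        self._stamp: Optional[Tuple[int, int]] = None
        self._value: Optional[str] = None

    def get(self) -> str:
        stat = self._path.stat()  # follows symlinks, as used by volume mounts
        stamp = (stat.st_mtime_ns, stat.st_size)
        if stamp != self._stamp:
            # The file was replaced or rewritten: load the new version.
            self._value = self._path.read_text(encoding="utf-8").strip()
            self._stamp = stamp
        return self._value
```

A caller would construct it once, for example `MountedSecret("/etc/secrets/db-password")` (an illustrative path), and call `get()` each time it opens a connection, so a rotated value is picked up on the next use.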

Tech Stack:

Backend: .NET 8, C#, Clean Architecture, Autofac, Serilog

Security: AWS Parameter Store (KMS encryption), AWS ALB, mTLS (X.509 certificates)

Infrastructure: Kubernetes (AKS/EKS), Docker, ConfigMaps, Secrets volumes

CI/CD: Codefresh pipelines with automated builds, tests, and Helm-based deployments
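To illustrate what mutual TLS with X.509 certificates means in practice, here is a minimal sketch of a server-side TLS context that refuses clients without a valid certificate. It uses Python's standard `ssl` module rather than AWS ALB, so it shows the principle only; the function name and the certificate file parameters are assumptions for illustration.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    server_cert: Optional[str] = None,
    server_key: Optional[str] = None,
    client_ca: Optional[str] = None,
) -> ssl.SSLContext:
    """Build a server context that requires a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client
    # presents a certificate that chains to a trusted CA.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if server_cert and server_key:
        # The server's own identity, presented to the client.
        ctx.load_cert_chain(certfile=server_cert, keyfile=server_key)
    if client_ca:
        # The CA bundle used to validate client (device) certificates.
        ctx.load_verify_locations(cafile=client_ca)
    return ctx
```

Both sides prove their identity: the server through its certificate chain, and the client through a certificate issued by the CA passed as `client_ca`.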

Impact:

Removed plain-text secrets from configuration files, closing off that source of leaks.

Enabled secure, tenant-aware, and scalable secrets distribution across edge devices and cloud services.

Improved compliance posture (HIPAA, SOC 2, ISO 27001) by enforcing encryption, access control, and audit trails.

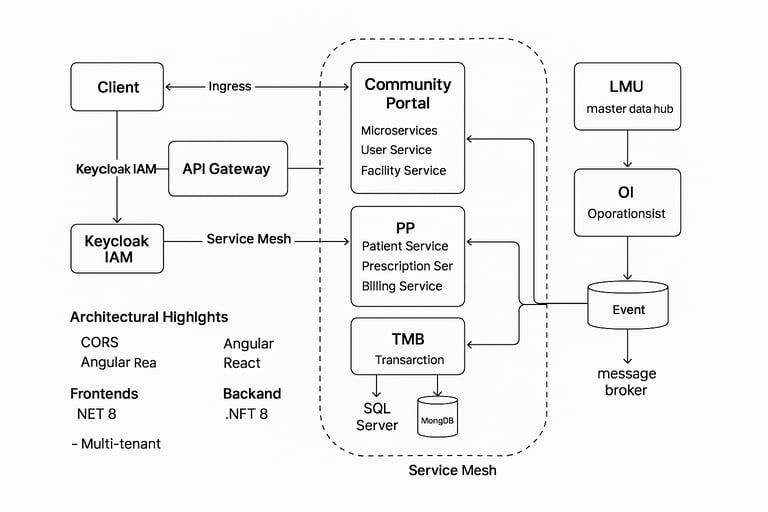

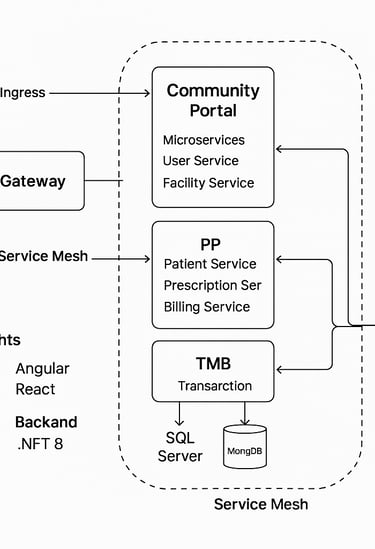

Community Portal

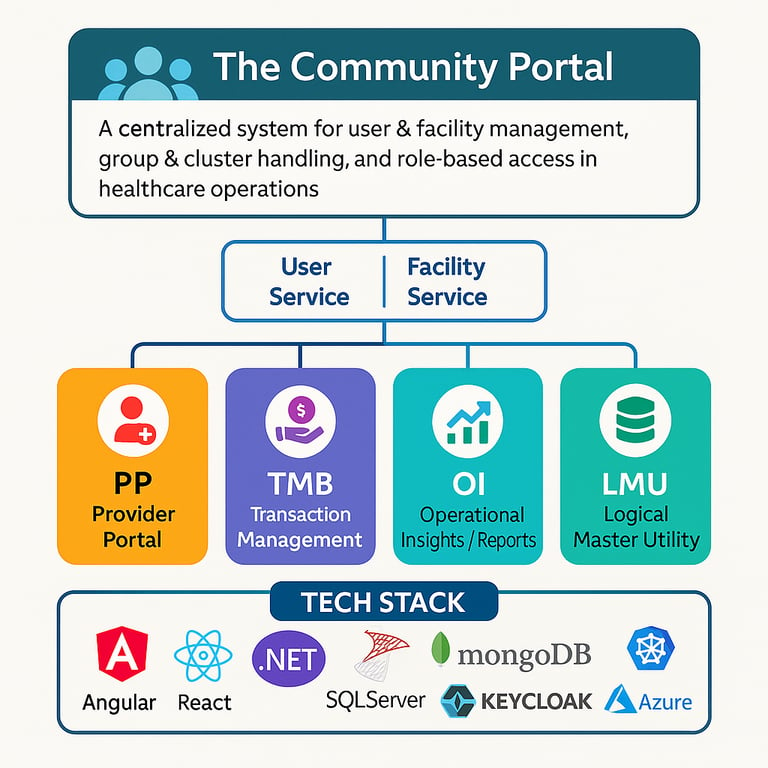

The Community Portal is a centralized system designed to manage facilities, users, groups, and clusters, ensuring seamless coordination across multiple healthcare operations. Its primary focus is user management and facility management, serving as the foundation for other integrated applications within the ecosystem.

The solution is architected as a microservices-based platform to achieve modularity, scalability, and maintainability. Each application serves a clear purpose and communicates with others via well-defined APIs, enabling a cohesive yet loosely coupled architecture.

Applications & Microservices

Community Portal

Microservices: User Service, Facility Service

Functionality: Centralized user & facility management, group & cluster handling, role-based access

PP (Provider Portal)

Microservices: Patient Service, Prescription Service, Billing Service

Functionality: Patient registration, prescription management, medicine dispensing, billing workflows

TMB (Transaction Management Backend)

Focused on secure and reliable transaction processing across systems

OI (Operational Insights / Reports)

Provides reporting and analytics, aggregating operational data across domains

LMU (Logical Master Utility)

Serves as the master data repository, consolidating and normalizing data from all defined resources

Architectural Highlights

CQRS (Command Query Responsibility Segregation): Optimized reads and writes with SQL Server (for transactional consistency) and MongoDB (for scalable queries).

Saga Patterns (Choreography & Orchestration): Ensure reliable long-running workflows such as patient registration, billing, and prescriptions across microservices.

Microservices Design: Independently deployable services with clear bounded contexts.

Identity & Access Management: Integrated with Keycloak for authentication, authorization, and role-based access control across all services.
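The orchestration style of saga mentioned above can be sketched as a coordinator that runs each step in order and, if one fails, undoes the completed steps in reverse. This is a simplified in-process illustration; the step names and functions are hypothetical, and in the real platform each step would be a call to a separate microservice.

```python
from typing import Callable, List, Tuple

# A step is (name, action, compensation).
Step = Tuple[str, Callable[[dict], None], Callable[[dict], None]]


def run_saga(steps: List[Step], ctx: dict) -> bool:
    """Run steps in order; on failure, compensate completed steps in reverse."""
    completed: List[Step] = []
    for step in steps:
        name, action, _ = step
        try:
            action(ctx)
            completed.append(step)
        except Exception as exc:
            ctx["failed_step"] = name
            ctx["error"] = str(exc)
            for _, _, undo in reversed(completed):
                undo(ctx)
            return False
    return True


# Hypothetical steps for a registration-to-billing workflow.
def register_patient(ctx): ctx["log"].append("patient registered")
def remove_patient(ctx): ctx["log"].append("patient removed")
def create_prescription(ctx): ctx["log"].append("prescription created")
def cancel_prescription(ctx): ctx["log"].append("prescription cancelled")
def charge(ctx): raise RuntimeError("billing unavailable")  # simulated failure
def refund(ctx): ctx["log"].append("refunded")


saga_ctx = {"log": []}
saga_ok = run_saga(
    [
        ("patient", register_patient, remove_patient),
        ("prescription", create_prescription, cancel_prescription),
        ("billing", charge, refund),
    ],
    saga_ctx,
)
print(saga_ok, saga_ctx["log"])
```

Because the billing step fails, the two earlier steps are compensated in reverse order and the failed step itself is never refunded. In a choreography-style saga there is no central coordinator; each service would react to the previous service's event instead.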

Tech Stack

Frontend: Angular, React

Backend: .NET Core / .NET 8 (Microservices Architecture, Clean Architecture principles)

Databases: SQL Server (transactional data), MongoDB (query models & high-volume reads)

Identity & Security: Keycloak (SSO, RBAC, OAuth2, OIDC)

Infrastructure: Kubernetes, Docker, CI/CD pipelines (Helm-based deployments, automated builds & tests)

Patterns: CQRS, Saga (Choreography & Orchestration), Event-Driven Communication
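As a rough illustration of the CQRS pattern listed above, the sketch below separates a write side that validates and records changes from a read side that is updated from events and shaped for queries. Both stores are in-memory stand-ins (for SQL Server and MongoDB respectively), and all class, event, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class FacilityRegistered:
    facility_id: str
    name: str
    cluster: str


class WriteStore:
    """Stand-in for the transactional store; enforces invariants."""

    def __init__(self) -> None:
        self.rows: Dict[str, dict] = {}
        self.outbox: List[FacilityRegistered] = []

    def register_facility(self, facility_id: str, name: str, cluster: str) -> None:
        if facility_id in self.rows:
            raise ValueError(f"facility {facility_id} already exists")
        self.rows[facility_id] = {"name": name, "cluster": cluster}
        # Record an event so the read side can catch up later.
        self.outbox.append(FacilityRegistered(facility_id, name, cluster))


class ReadModel:
    """Stand-in for the query store; denormalized for fast lookups."""

    def __init__(self) -> None:
        self.by_cluster: Dict[str, List[str]] = {}

    def apply(self, event: FacilityRegistered) -> None:
        self.by_cluster.setdefault(event.cluster, []).append(event.name)

    def facilities_in(self, cluster: str) -> List[str]:
        return list(self.by_cluster.get(cluster, []))


def publish_pending(store: WriteStore, model: ReadModel) -> None:
    """Deliver queued events to the read model, oldest first."""
    while store.outbox:
        model.apply(store.outbox.pop(0))
```

The read model is only as fresh as the last call to `publish_pending`, which mirrors the eventual consistency that comes with keeping separate write and query stores.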

Impact

Provided a centralized, tenant-aware platform for managing healthcare facilities, patients, and transactions.

Improved data consistency across multiple applications through LMU (master data hub).

Increased scalability & maintainability by adopting microservices, CQRS, and event-driven patterns.

Enabled secure multi-tenant identity management with Keycloak.

Delivered modular extensibility, allowing new microservices to be added without disrupting existing workflows.

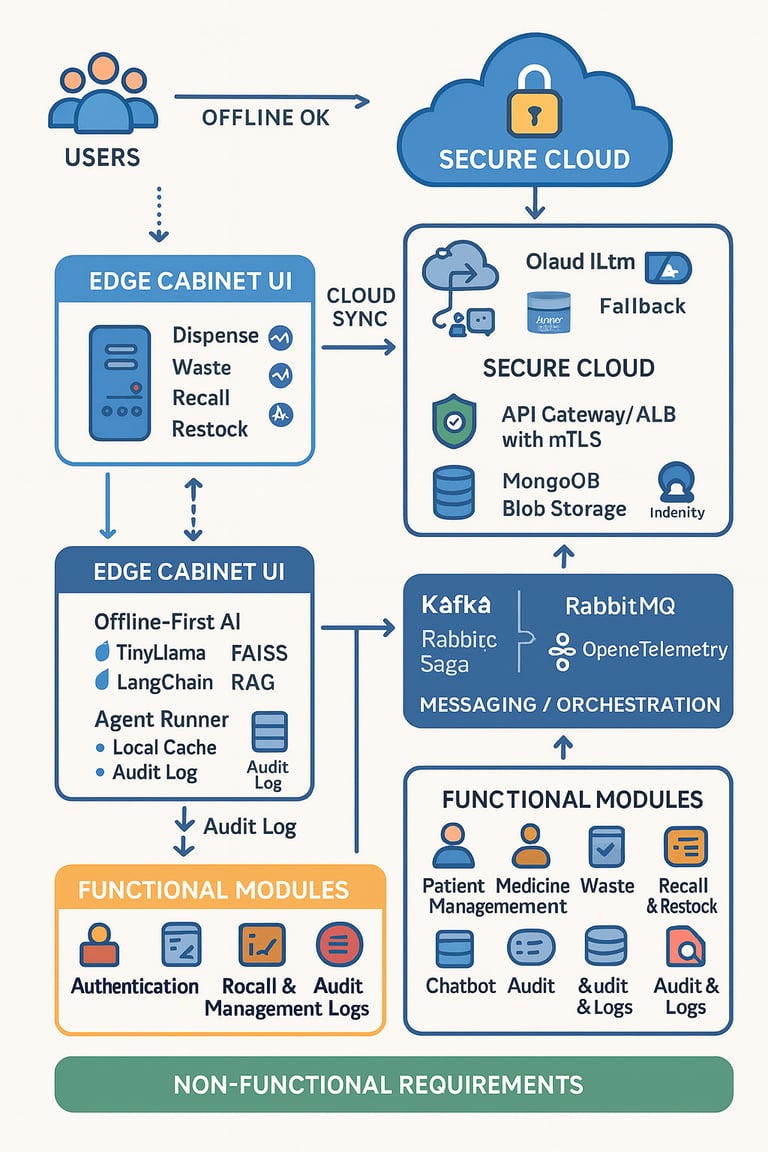

AI-Driven Edge Health Chatbot

Overview

A secure, AI-enabled, cloud-integrated platform that modernizes medication workflows (dispense, waste, recall, restock) with robust offline edge capability and a natural chatbot interface. The system blends on-device AI (TinyLlama + FAISS + LangChain) with a cloud fallback LLM to deliver low-latency assistance and decision support at the cabinet, while securely syncing with backend microservices.

Key Features

Offline-First AI at the Edge: TinyLlama runs on the device for sub-second guidance; FAISS stores SOP, log, and inventory embeddings for semantic retrieval via LangChain RAG.

Cloud Fallback LLM: When online, queries can be routed to an Azure-hosted LLM for heavier reasoning, transparently to the user.

Agentic Actions: An Agent Runner safely invokes backend microservices (Medicine, Inventory, Waste, Recall/Restock, Patient) to fetch live data or execute workflows.

Secure by Design: mTLS between edge and cloud; RBAC/SSO (Keycloak / Entra ID); encrypted data at rest and in transit; detailed audit trails.

CQRS + Event-Driven: Transactional writes in SQL Server; high-volume read models and telemetry in MongoDB; Kafka/RabbitMQ for reliable saga orchestration.

Observability: Centralized logs (Serilog), traces/metrics (OpenTelemetry), dashboards (Grafana/Prometheus).
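The offline-first routing described above can be sketched as a small function that prefers the cloud model only when the device is online and the query needs it, and otherwise answers locally. This is an assumption-laden illustration: how the real system decides that a query needs heavier reasoning is not stated, so `needs_cloud` is a hypothetical predicate, and the models are plain callables rather than TinyLlama or an Azure-hosted LLM.

```python
from typing import Callable, Tuple


def answer(
    query: str,
    edge_model: Callable[[str], str],
    cloud_model: Callable[[str], str],
    is_online: Callable[[], bool],
    needs_cloud: Callable[[str], bool],
) -> Tuple[str, str]:
    """Return (source, reply), where source is "cloud" or "edge"."""
    if needs_cloud(query) and is_online():
        try:
            return "cloud", cloud_model(query)
        except Exception:
            # Any cloud failure (timeout, network drop) falls through
            # to the on-device model so the user still gets a reply.
            pass
    return "edge", edge_model(query)
```

The edge model is the default path and the cloud is an optional upgrade, so an outage degrades answer quality rather than availability.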

AI & RAG Subsystem

Mini LLM: TinyLlama on device for fast, offline responses.

Vector DB: FAISS stores embeddings for SOPs, logs, and inventory.

RAG: LangChain retrieves context from FAISS and composes prompts for TinyLlama.

Fallback: If connected, an Azure LLM handles complex queries.

Agents: Safely call backend services (Medicine, Inventory, Waste, Recall/Restock, Patient) to read/act on real data.
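The retrieve-then-prompt flow above can be illustrated without any of the named libraries. The sketch below deliberately swaps in a toy bag-of-words vector and a linear cosine-similarity scan in place of learned embeddings and a FAISS index, and it stops at building the prompt rather than calling TinyLlama. All function names and the sample SOP snippets are made up for illustration.

```python
import math
from collections import Counter
from typing import List


def embed(text: str) -> Counter:
    """Toy embedding: word counts. A real system would use a learned model."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: List[str], k: int = 2) -> List[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: List[str], k: int = 2) -> str:
    """Compose a prompt that grounds the model in the retrieved context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


sop_snippets = [
    "waste procedure requires a witness signature",
    "restock cabinet when stock is below threshold",
    "recall notices are sent by the supplier",
]
print(build_prompt("how do I restock the cabinet", sop_snippets, k=1))
```

The shape of the pipeline is the same as in the real subsystem: embed the query, find the nearest stored items, and place them in the prompt so the small on-device model answers from retrieved text rather than from memory.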

Functional Modules

Authentication: Password, fingerprint, or face/vision; token-based sessions.

Patient Management: Patient lists, assigned prescriptions, clinical context.

Medicine Management: Stock view; dispense/waste/recall/restock/remove; batch/expiry tracking.

Inventory: Per-cabinet inventory; alerts and thresholding.

Waste: Capture structured reasons; reconciliation and audit.

Recall & Restock: Manual + automatic flows; supplier/EHR hooks (extensible).

Chatbot: Edge-local Q&A, cabinet status, guidance on SOPs; upgrades to cloud LLM when available.

Audit & Logs: Every action stamped with user/time/device and retained per policy.
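An audit entry stamped with user, time, and device could look like the record below. This is a sketch only: the field names, the JSON-lines format, and the sample identifiers are assumptions, not the system's actual schema.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


def _utc_now() -> str:
    # ISO 8601 timestamp in UTC, so entries from different sites sort consistently.
    return datetime.now(timezone.utc).isoformat()


@dataclass(frozen=True)
class AuditEvent:
    user_id: str
    device_id: str
    action: str
    details: dict = field(default_factory=dict)
    timestamp: str = field(default_factory=_utc_now)

    def to_json_line(self) -> str:
        """Serialize as one JSON object per line for append-only logs."""
        return json.dumps(asdict(self), sort_keys=True)


event = AuditEvent(
    user_id="nurse-17",          # hypothetical identifiers
    device_id="cabinet-03",
    action="dispense",
    details={"medicine": "example-med", "qty": 1},
)
print(event.to_json_line())
```

Making the record immutable (`frozen=True`) and writing it as a single line keeps entries easy to append, ship to centralized logging, and verify later.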

Non-Functional Requirements

Availability: 99.9% at edge (offline capable); resilient cloud sync.

Security & Compliance: TLS/mTLS, RBAC/SSO, encryption; HIPAA-aligned design for PHI/PII protection.

Performance: Typical edge chatbot response ≤ 500 ms; critical APIs ≤ 1 s.

Scalability: Add/scale microservices independently; partition by tenant/cabinet.

Deployment: Dockerized edge (Linux); cloud on Kubernetes; GitOps/Helm deployments.
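To make the 99.9% availability target concrete, it helps to convert it into a downtime budget. The short calculation below is plain arithmetic; the function name is illustrative.

```python
def downtime_budget_minutes(availability_pct: float, period_days: float) -> float:
    """Minutes of allowed downtime in a period at a given availability."""
    return (1 - availability_pct / 100) * period_days * 24 * 60


per_month = downtime_budget_minutes(99.9, 30)   # about 43.2 minutes
per_year = downtime_budget_minutes(99.9, 365)   # about 525.6 minutes (8.76 hours)
print(round(per_month, 1), round(per_year / 60, 2))
```

At 99.9%, a cabinet may be unavailable for roughly 43 minutes in a 30-day month, which is why offline capability matters: a network outage alone could otherwise consume the whole budget.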

Tech Stack

Edge: TinyLlama, FAISS, LangChain, Agent Runner (Python/.NET), Local Cache (SQLite/Files), Linux.

Frontend: React/Angular (Touch UI for cabinet + web console).

Backend: .NET 8 microservices (Clean Architecture, Autofac, Serilog).

Data: SQL Server (transactions & PHI), MongoDB (query/telemetry), Blob Storage/Data Lake (SOPs, artifacts, logs).

Messaging/Orchestration: Kafka or RabbitMQ; Saga patterns (choreography/orchestration).

Identity: Keycloak / Entra ID (OIDC/OAuth2, SSO, RBAC).

Cloud LLM: Azure-hosted model (fallback/online).

Observability: OpenTelemetry, Grafana/Prometheus, centralized logging.

Impact

Safer operations: Guided, auditable medication workflows that reduce errors and speed up actions.

Always-on: Offline AI keeps cabinets useful during outages; the cloud LLM augments it when online.

Faster onboarding & training: SOP-aware chatbot reduces cognitive load and training time.

Future-proof: Modular microservices + agentic layer make it easy to add capabilities.